Audio AI is shifting from narrow, task-specific systems to unified, foundation-level architectures. Just as NLP had its LLM moment, audio is now entering its own revolution.

Kimi-Audio is a major leap in that direction: an open-source, instruction-following foundation model that handles audio understanding, generation, and real-time conversation within one architecture.

🧠 What makes it different?

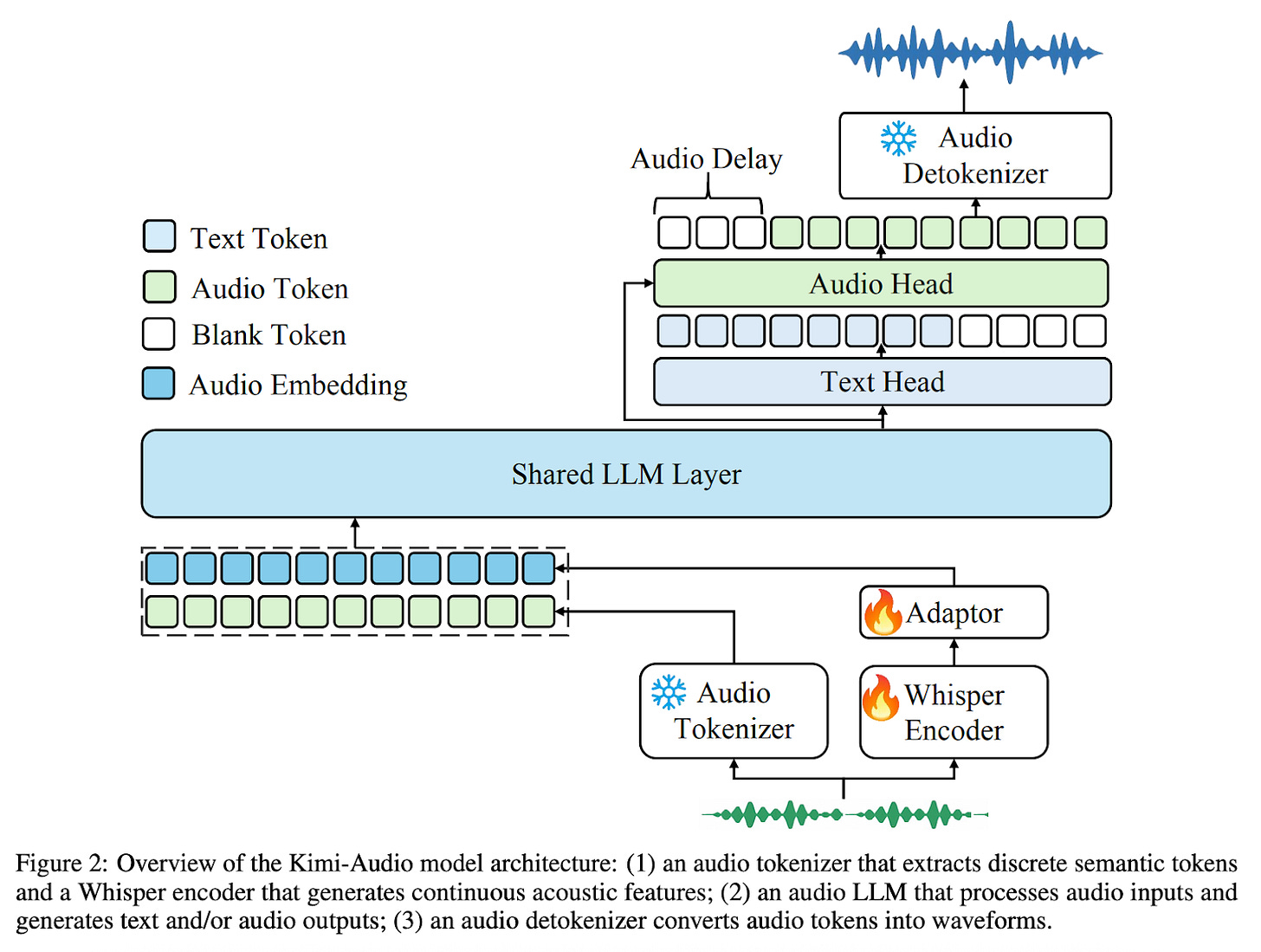

Kimi-Audio uses a hybrid input representation to bridge text and audio:

• Discrete semantic tokens at 12.5 Hz from an ASR-based tokenizer

• Continuous acoustic vectors from a Whisper encoder, downsampled to the same 12.5 Hz
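
To make the two streams concrete, here is a minimal sketch of how they could be aligned and merged. It assumes three things the post does not spell out: that the acoustic features start at the 50 Hz frame rate of a Whisper encoder (1500 frames per 30-second window), that downsampling is done by averaging groups of four frames, and that the streams are merged by element-wise addition. The function names `downsample` and `fuse` and the tiny embedding table are hypothetical, chosen only for illustration.

```python
from typing import List

Vector = List[float]

WHISPER_FRAME_RATE_HZ = 50.0  # Whisper encoder: 1500 frames per 30 s window
TARGET_RATE_HZ = 12.5         # rate quoted for both input streams
STRIDE = int(WHISPER_FRAME_RATE_HZ / TARGET_RATE_HZ)  # 4 frames per step


def downsample(frames: List[Vector], stride: int = STRIDE) -> List[Vector]:
    """Average each full group of `stride` frames (assumed pooling scheme)."""
    out: List[Vector] = []
    for start in range(0, len(frames) - stride + 1, stride):
        group = frames[start:start + stride]
        dim = len(group[0])
        out.append([sum(f[d] for f in group) / stride for d in range(dim)])
    return out


def fuse(token_ids: List[int],
         embedding_table: List[Vector],
         acoustic: List[Vector]) -> List[Vector]:
    """Add each token's embedding to the acoustic vector at the same step."""
    if len(token_ids) != len(acoustic):
        raise ValueError("both streams must have the same number of steps")
    return [
        [e + a for e, a in zip(embedding_table[tok], vec)]
        for tok, vec in zip(token_ids, acoustic)
    ]


# Two seconds of fake 50 Hz features: frame i is [i, 2i].
frames = [[float(i), float(2 * i)] for i in range(100)]
acoustic = downsample(frames)                       # 25 steps at 12.5 Hz
token_ids = [i % 3 for i in range(len(acoustic))]   # fake semantic tokens
embedding_table = [[0.0, 0.0], [1.0, 1.0], [2.0, 2.0]]
fused = fuse(token_ids, embedding_table, acoustic)
ms_per_step = 1000.0 / TARGET_RATE_HZ               # 80 ms of audio per step
```

At 12.5 Hz each step covers 80 ms of audio, so the transformer sees far shorter sequences than it would at the raw encoder rate.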

These are fed into a shared transformer initialized from a large language model, with:

• Dual heads for generating both text and discrete audio tokens

• A streaming detokenizer for real-time synthesis: flow matching turns audio tokens into mel-spectrograms, and a BigVGAN vocoder turns those into waveforms

• A novel look-ahead mechanism that smooths audio at chunk boundaries
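
The look-ahead idea can be illustrated with a toy example. This is a sketch under stated assumptions, not Kimi-Audio's implementation: the real detokenizer uses flow matching and BigVGAN, while `toy_decode` below is a hypothetical stand-in whose output for each token depends on the token to its right. The helper names, chunk size, and look-ahead length are all made up for illustration.

```python
from typing import Callable, Iterator, List, Sequence, Tuple


def chunks_with_lookahead(
    tokens: Sequence[int], chunk_size: int, lookahead: int
) -> Iterator[Tuple[List[int], List[int]]]:
    """Yield (chunk, future), where `future` peeks into the next chunk."""
    for start in range(0, len(tokens), chunk_size):
        end = start + chunk_size
        yield list(tokens[start:end]), list(tokens[end:end + lookahead])


def stream_decode(
    tokens: Sequence[int],
    decode: Callable[[List[int]], List[float]],
    chunk_size: int = 4,
    lookahead: int = 2,
) -> List[float]:
    """Decode chunk by chunk; `decode` maps N tokens to N output frames."""
    output: List[float] = []
    for chunk, future in chunks_with_lookahead(tokens, chunk_size, lookahead):
        frames = decode(chunk + future)
        # Keep only the current chunk's frames; the look-ahead frames are
        # regenerated when their own chunk is decoded.
        output.extend(frames[:len(chunk)])
    return output


def toy_decode(tokens: List[int]) -> List[float]:
    """Toy decoder: each frame averages a token with its right neighbor."""
    last = len(tokens) - 1
    return [(t + tokens[min(i + 1, last)]) / 2 for i, t in enumerate(tokens)]


tokens = list(range(10))
full = toy_decode(tokens)                                  # offline reference
with_lookahead = stream_decode(tokens, toy_decode, 4, 2)
without_lookahead = stream_decode(tokens, toy_decode, 4, 0)
```

In this toy setup, streaming with look-ahead reproduces the offline output exactly, while streaming without it produces different values at the last frame of each chunk, which is the kind of boundary artifact the mechanism is meant to smooth out.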

The result: low-latency, high-quality, multimodal generation from a single, unified system.

🚀 Why it matters

Kimi-Audio changes how we build audio systems:

• Unified architecture replaces separate ASR, TTS, and sound models

• Real-time, instruction-following capabilities open new application domains

• Designed to support diverse data: speech, sound, music, and text

• Open-source release promotes transparency, reproducibility, and extensibility

This model is part of a broader vision for general-purpose audio intelligence — where models can hear, understand, and speak in natural, context-aware ways.

🛠️ Built for the community

Kimi-Audio was pre-trained on 13M+ hours of audio and fine-tuned on 300K hours of labeled, task-specific data. It comes with:

• Full codebase

• Released model checkpoints

• Evaluation toolkit

🔗 Explore: https://github.com/MoonshotAI/Kimi-Audio